How to Fine-tune a Model Like ChatGPT in Spanish Using Alpaca-LoRA

ChatGPT is one of the most advanced language models available today, capable of producing human-like responses to a wide range of natural language inputs, including conversations, articles, and even creative writing. It has been trained on a massive amount of diverse data, allowing it to understand and generate text on a wide range of topics and in many styles.

Due to its exceptional performance, ChatGPT has been widely adopted for various applications, such as language translation, chatbots, and content generation. As a result, it has become an essential tool for many businesses, researchers, and developers who are looking to leverage the power of natural language processing in their work.

But did you know that you can fine-tune a ChatGPT-like model for specific use cases? In this guide, we'll explore how you can do just that using Alpaca-LoRA.

What is that? Alpaca is an instruction-following LLM built on Meta AI's LLaMA 7B model, which has 7 billion parameters. Low-rank adaptation (LoRA) is a parameter-efficient fine-tuning technique: instead of updating all of the model's weights, it freezes them and trains small low-rank matrices added to selected layers. This is far more memory efficient, which means we don't need as big a GPU for training, text generation, etc.
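To make the memory savings concrete, here is a back-of-the-envelope count of how many weights LoRA actually trains. It is a sketch under stated assumptions: LLaMA 7B shapes (32 transformer layers, 4096 x 4096 query and value projections), adapters on `q_proj` and `v_proj` as in the configuration used later in this post, and a rank of r = 8, which is an assumed value. The helper name is ours.

```python
def lora_trainable_params(num_layers, d_in, d_out, rank, modules_per_layer):
    """Count LoRA weights: each adapted d_out x d_in matrix W gets
    A (rank x d_in) and B (d_out x rank); W itself stays frozen."""
    per_module = rank * d_in + d_out * rank
    return num_layers * modules_per_layer * per_module


# Assumed LLaMA 7B shapes: 32 layers, 4096 x 4096 q_proj and v_proj matrices
trainable = lora_trainable_params(num_layers=32, d_in=4096, d_out=4096,
                                  rank=8, modules_per_layer=2)
print(f"{trainable:,}")  # 4,194,304
```

That is roughly 4.2 million trainable weights, well under 0.1% of the roughly 6.7 billion parameters in LLaMA 7B, which is why the optimizer state fits on a much smaller GPU.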

In this blog post you will learn how to fine-tune a ChatGPT-like model for Spanish with Alpaca-LoRA and take your NLP skills to the next level!

“Are you interested in learning more about data science and advancing your career?

Look no further than Pluralsight!

Don’t miss out on this opportunity to take your career to the next level.”

Visit: https://pluralsight.pxf.io/DVX5jy today to get started!

1. The Data

The Stanford Alpaca project fine-tuned the LLaMA model on a dataset of over 52,000 instruction-following records in English. For more information on this dataset, please refer to the official data repository here.

To give you an idea of what the training data looks like, here’s an example:

```json
[
    {
        "instruction": "Give three tips for staying healthy.",
        "input": "",
        "output": "1.Eat a balanced diet and make sure to include plenty of fruits and vegetables. \n2. Exercise regularly to keep your body active and strong. \n3. Get enough sleep and maintain a consistent sleep schedule."
    },
    {
        "instruction": "What are the three primary colors?",
        "input": "",
        "output": "The three primary colors are red, blue, and yellow."
    },
    {
        "instruction": "Describe the structure of an atom.",
        "input": "",
        "output": "An atom is made up of a nucleus, which contains protons and neutrons, surrounded by electrons that travel in orbits around the nucleus. The protons and neutrons have a positive charge, while the electrons have a negative charge, resulting in an overall neutral atom. The number of each particle determines the atomic number and the type of atom."
    }
]
```

In this blog post we will fine-tune the model for Spanish, so we need to translate the dataset. You can use any translation method you already know.

Below is a code snippet showing the library I used to translate the dataset:

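```python
import concurrent.futures
import glob
import json

import tqdm
from easygoogletranslate import EasyGoogleTranslate

translator = EasyGoogleTranslate(
    source_language="en",
    target_language="es",
    timeout=10,
)


def translate_file(file):
    # Each file is assumed to hold a single Alpaca record:
    # {"instruction": ..., "input": ..., "output": ...}
    with open(file, "r") as f:
        record = json.load(f)
    # Translate every non-empty field; empty "input" fields stay empty
    return {key: translator.translate(value) if value else value
            for key, value in record.items()}


# Sort files numerically by their index suffix, e.g. ".../alpaca_123.json"
files = glob.glob("/alpacadata/*.json")
files = sorted(files, key=lambda x: int(x.replace(".json", "").split("_")[-1]))

# Translation is network-bound, so threads are enough; executor.map
# returns results in the same order as the sorted file list
with concurrent.futures.ThreadPoolExecutor(max_workers=100) as executor:
    items = list(tqdm.tqdm(executor.map(translate_file, files), total=len(files)))

with open("/alpaca-translated/alpaca_data_translated.json", "w") as f:
    # ensure_ascii=False keeps accented Spanish characters readable
    json.dump(items, f, indent=4, ensure_ascii=False)
```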

The translation process produces the file we will train our model on: "alpaca_data_translated.json".
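The translation script expects the English dataset to already be split into one JSON file per record inside `/alpacadata`, each with a numeric suffix that the sort key parses. That step is not shown here, so the sketch below is one way to do it. It assumes the original `alpaca_data.json` is a JSON array of records; `split_records` and the `alpaca_<index>.json` naming scheme are hypothetical choices that simply match what the sort key expects.

```python
import json
import os


def split_records(source_path, out_dir):
    """Write each record of a JSON array to its own file: alpaca_0.json, alpaca_1.json, ..."""
    with open(source_path, "r", encoding="utf-8") as f:
        records = json.load(f)
    os.makedirs(out_dir, exist_ok=True)
    for i, record in enumerate(records):
        # The numeric suffix after the last "_" is what the translation script sorts on
        path = os.path.join(out_dir, f"alpaca_{i}.json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump(record, f, ensure_ascii=False)
    return len(records)


# Usage (example paths): split_records("alpaca_data.json", "/alpacadata")
```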

Remember that you can find all the code in this repo.

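Now we load the data as a Hugging Face dataset and split it into training and validation sets:

```python
from datasets import load_dataset

data = load_dataset("json", data_files="alpaca_data_translated.json")

# Hold out 2,000 examples for validation (about 50k train / 2k validation)
train_val = data["train"].train_test_split(test_size=2000, shuffle=True, seed=42)
train_data, val_data = train_val["train"], train_val["test"]
```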

2. The Model:

2.1 Definition

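We are fine-tuning following the Alpaca-LoRA approach. Stanford Alpaca is an instruction-following model developed by researchers at Stanford University on top of the LLaMA model released by Meta; Alpaca-LoRA reproduces it by training LoRA adapters on the base LLaMA 7B weights instead of updating the whole model. The specific task, in this case, is causal language modeling (CLM): predicting each next token from the tokens that precede it.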
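To see what "causal" means in practice, the toy function below lists the (context, target) pairs that a single training sequence provides. The token ids are made up and the helper name is ours; a real model computes all of these predictions in one forward pass, and the Hugging Face model shifts the labels internally when it computes the loss.

```python
def next_token_examples(token_ids):
    """Causal language modeling: every prefix of the sequence is a
    context, and the token right after it is the prediction target."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


# Hypothetical token ids for a short sentence
for context, target in next_token_examples([101, 7, 42, 9]):
    print(context, "->", target)
```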

To fine-tune for this causal language modeling task, we load the LLaMA 7B model and its tokenizer from the Hugging Face Hub and wrap the model with a LoRA configuration:

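```python
from peft import LoraConfig, get_peft_model, prepare_model_for_int8_training
from transformers import LlamaForCausalLM, LlamaTokenizer

# Assumed LoRA hyperparameters (the defaults of the original Alpaca-LoRA script)
LORA_R = 8
LORA_ALPHA = 16
LORA_DROPOUT = 0.05

# Load the 7B base model in 8-bit so it fits on a single GPU.
# Note: this checkpoint may no longer be available on the Hub; use any
# LLaMA 7B checkpoint in Hugging Face format that you have access to.
model = LlamaForCausalLM.from_pretrained(
    "decapoda-research/llama-7b-hf",
    load_in_8bit=True,
    device_map="auto",
)
tokenizer = LlamaTokenizer.from_pretrained(
    "decapoda-research/llama-7b-hf", add_eos_token=True
)

# Prepare the 8-bit model for training (e.g. casting layer norms to fp32);
# newer peft releases rename this to prepare_model_for_kbit_training
model = prepare_model_for_int8_training(model)

config = LoraConfig(
    r=LORA_R,
    lora_alpha=LORA_ALPHA,
    target_modules=["q_proj", "v_proj"],  # adapt the attention query/value projections
    lora_dropout=LORA_DROPOUT,
    bias="none",
    task_type="CAUSAL_LM",
)
model = get_peft_model(model, config)

# The LLaMA tokenizer has no padding token, so reuse id 0 (<unk>) for padding
tokenizer.pad_token_id = 0
```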
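The `r`, `lora_alpha`, and `target_modules` settings map directly onto the LoRA update: for each targeted projection, the frozen weight W is combined with a trainable low-rank product B·A scaled by `lora_alpha / r`. The pure-Python sketch below, with tiny made-up matrices and hypothetical helper names, shows that forward pass. As in LoRA, B starts at zero, so before any training the adapted layer returns exactly what the frozen layer does.

```python
def matvec(matrix, vector):
    # Multiply a matrix (list of rows) by a vector
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, lora_alpha, r):
    """h = W x + (lora_alpha / r) * B (A x); W is frozen, A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = lora_alpha / r
    return [b + scale * u for b, u in zip(base, update)]


# Toy layer: d = 3, rank r = 1
W = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
A = [[0.5, 0.5, 0.5]]            # r x d
B_init = [[0.0], [0.0], [0.0]]   # d x r, zeros at initialization
x = [1.0, 1.0, 1.0]

print(lora_forward(W, A, B_init, x, lora_alpha=2, r=1))  # [1.0, 2.0, 3.0]

# After some (made-up) training, B is non-zero and the output shifts
B_trained = [[0.1], [0.0], [-0.1]]
print(lora_forward(W, A, B_trained, x, lora_alpha=2, r=1))
```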

2.2 The prompt format creation

Time to prepare the data for the task: each record is turned into a prompt, and the prompt is then tokenized.

A function called generate_prompt takes a data_point as input. If the data_point has a non-empty "input" field, the function returns a string with instruction, input, and response sections; otherwise, it returns a string with only instruction and response sections.

The tokenizer is then applied to the output of generate_prompt, and the resulting tokenized data is stored in the train_data and val_data variables:

```python
# Assumed maximum sequence length in tokens (the original Alpaca-LoRA default)
CUTOFF_LEN = 256


def generate_prompt(data_point):
    # Records with a non-empty "input" use the instruction + input template
    if data_point["input"]:
        return f"""Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{data_point["instruction"]}

### Input:
{data_point["input"]}

### Response:
{data_point["output"]}"""
    else:
        return f"""Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:
{data_point["instruction"]}

### Response:
{data_point["output"]}"""


def tokenize(prompt):
    # Pad/truncate to CUTOFF_LEN + 1 tokens, then drop the last position
    # so every example is exactly CUTOFF_LEN tokens long
    result = tokenizer(
        prompt,
        truncation=True,
        max_length=CUTOFF_LEN + 1,
        padding="max_length",
    )
    return {
        "input_ids": result["input_ids"][:-1],
        "attention_mask": result["attention_mask"][:-1],
    }


train_data = train_data.shuffle().map(lambda x: tokenize(generate_prompt(x)))
val_data = val_data.shuffle().map(lambda x: tokenize(generate_prompt(x)))
```
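The tokenize function pads or truncates every prompt to CUTOFF_LEN + 1 tokens and then drops the last position, so every example ends up exactly CUTOFF_LEN tokens long. The pure-Python mimic below reproduces only that length arithmetic; it is not the Hugging Face tokenizer. The token ids and the `pad_and_trim` name are made up, the pad id 0 matches `tokenizer.pad_token_id = 0`, and right-side padding is an assumption of this sketch.

```python
def pad_and_trim(token_ids, cutoff_len, pad_id=0):
    """Mimic tokenize(): truncate/pad to cutoff_len + 1, then drop the last
    position, returning input_ids and attention_mask of length cutoff_len."""
    ids = token_ids[: cutoff_len + 1]
    mask = [1] * len(ids)
    # Right-side padding up to cutoff_len + 1 (assumed padding side)
    padding = cutoff_len + 1 - len(ids)
    ids = ids + [pad_id] * padding
    mask = mask + [0] * padding
    return {"input_ids": ids[:-1], "attention_mask": mask[:-1]}


short = pad_and_trim([1, 15, 27, 2], cutoff_len=6)
print(short)  # {'input_ids': [1, 15, 27, 2, 0, 0], 'attention_mask': [1, 1, 1, 1, 0, 0]}

long_example = pad_and_trim(list(range(1, 11)), cutoff_len=6)
print(long_example["input_ids"])  # [1, 2, 3, 4, 5, 6]
```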

2.3 The trainer definition

Now we set up the training loop with the Hugging Face transformers library. The Trainer object is initialized with the following parameters:

model: the LoRA-wrapped LLaMA model to be fine-tuned.

train_dataset: the training dataset (about 50,000 examples).

eval_dataset: the validation dataset (about 2,000 examples).

args: a TrainingArguments object containing various hyperparameters and settings for the training loop, including the batch size, number of training epochs, learning rate, and logging, evaluation, and saving strategies.

data_collator: a DataCollatorForLanguageModeling object that batches the tokenized examples and builds the labels before they are fed to the model.
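With `mlm=False`, `DataCollatorForLanguageModeling` builds the labels by copying `input_ids` and replacing padding positions with -100, the index that PyTorch's cross-entropy loss ignores by default. Since this post sets `tokenizer.pad_token_id = 0`, every position holding id 0 is left out of the loss. The sketch below mimics that step for one example in plain Python; `causal_lm_labels` is our own name, not part of transformers.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss


def causal_lm_labels(input_ids, pad_token_id=0):
    """Copy input_ids into labels, masking padding positions out of the loss."""
    return [IGNORE_INDEX if token == pad_token_id else token for token in input_ids]


print(causal_lm_labels([1, 15, 27, 2, 0, 0]))  # [1, 15, 27, 2, -100, -100]
```

The labels are not shifted here; the model shifts them by one position internally when it computes the next-token loss.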

The model.config.use_cache = False line turns off the key/value cache, which is only useful during generation; disabling it avoids unnecessary memory use when training large models.

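```python
import os

import transformers

# Assumed training hyperparameters (the defaults of the original Alpaca-LoRA script)
MICRO_BATCH_SIZE = 4
BATCH_SIZE = 128
GRADIENT_ACCUMULATION_STEPS = BATCH_SIZE // MICRO_BATCH_SIZE
EPOCHS = 3
LEARNING_RATE = 3e-4

# True when launched on several GPUs with torchrun (distributed data parallel)
ddp = int(os.environ.get("WORLD_SIZE", 1)) != 1

trainer = transformers.Trainer(
    model=model,
    train_dataset=train_data,
    eval_dataset=val_data,
    args=transformers.TrainingArguments(
        per_device_train_batch_size=MICRO_BATCH_SIZE,
        gradient_accumulation_steps=GRADIENT_ACCUMULATION_STEPS,
        warmup_steps=100,
        num_train_epochs=EPOCHS,
        learning_rate=LEARNING_RATE,
        fp16=True,
        logging_steps=20,
        evaluation_strategy="steps",  # called eval_strategy in newer transformers releases
        save_strategy="steps",
        eval_steps=200,
        save_steps=200,
        output_dir="sp-lora-alpaca",
        save_total_limit=3,
        load_best_model_at_end=True,
        ddp_find_unused_parameters=False if ddp else None,
    ),
    # mlm=False: plain next-token (causal) objective, labels copied from input_ids
    data_collator=transformers.DataCollatorForLanguageModeling(tokenizer, mlm=False),
)
# Disable the key/value cache, which is only needed for generation
model.config.use_cache = False
```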
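To estimate how long training runs, note that each optimizer step sees MICRO_BATCH_SIZE × GRADIENT_ACCUMULATION_STEPS examples per device. The sketch below assumes a single GPU, about 50,000 training examples, and the assumed values MICRO_BATCH_SIZE = 4, GRADIENT_ACCUMULATION_STEPS = 32, and EPOCHS = 3 (substitute your own). The Trainer's exact step count can differ by one depending on how it rounds the final partial batch; `training_schedule` is a hypothetical helper.

```python
import math


def training_schedule(num_examples, micro_batch_size, grad_accum_steps, epochs, eval_steps):
    """Approximate optimizer steps and evaluations on a single device."""
    effective_batch = micro_batch_size * grad_accum_steps
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    total_steps = steps_per_epoch * epochs
    num_evals = total_steps // eval_steps  # evaluations at eval_steps, 2 * eval_steps, ...
    return effective_batch, steps_per_epoch, total_steps, num_evals


print(training_schedule(50_000, micro_batch_size=4, grad_accum_steps=32, epochs=3, eval_steps=200))
# (128, 391, 1173, 5)
```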

3. Training:

Finally, it is time to train the model. The trainer object iterates through the training data in batches, updates the LoRA adapter weights based on the loss computed on each batch, and periodically evaluates the model on the validation set. Training runs for the specified number of epochs:

```python
model.config.use_cache = False
trainer.train(resume_from_checkpoint=False)

# Save the trained LoRA adapter so it can be loaded for evaluation in section 4
model.save_pretrained("sp-lora-alpaca")
```

Once you run it, training should start.

4. Evaluation of the Model:

4.1 Merge the weights

Once the model is trained and saved, we generate text by loading the base LLaMA model and applying our custom LoRA weights on top of it:

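```python
from peft import PeftModel
from transformers import LlamaForCausalLM, LlamaTokenizer

tokenizer = LlamaTokenizer.from_pretrained("decapoda-research/llama-7b-hf")

# Load the frozen base model in 8-bit, exactly as during training
model_custom = LlamaForCausalLM.from_pretrained(
    "decapoda-research/llama-7b-hf",
    load_in_8bit=True,
    device_map="auto",
)

# Apply the LoRA adapter weights saved in the "sp-lora-alpaca" directory
model_custom = PeftModel.from_pretrained(model_custom, "sp-lora-alpaca")
model_custom.eval()
```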
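`PeftModel.from_pretrained` keeps the adapter separate from the frozen LLaMA weights and adds its contribution on every forward pass. Because the update is linear, it can also be folded into the base weight once, W' = W + (lora_alpha / r)·B·A; recent peft versions expose this as `merge_and_unload()`. The pure-Python sketch below, with tiny made-up matrices and hypothetical helper names, checks that the merged weight gives the same output as the base layer plus the adapter.

```python
def matmul(X, Y):
    # (n x k) @ (k x m), matrices stored as lists of rows
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def matvec(M, v):
    return [sum(a * b for a, b in zip(row, v)) for row in M]


def merge_lora(W, A, B, lora_alpha, r):
    """Fold the adapter into the frozen weight: W' = W + (lora_alpha / r) * B @ A."""
    scale = lora_alpha / r
    BA = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, BA)]


W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, -0.5]]    # r x d with r = 1
B = [[0.2], [0.4]]   # d x r
x = [2.0, 1.0]

merged = merge_lora(W, A, B, lora_alpha=2, r=1)
# Unmerged path: base output plus scaled adapter output
adapter_out = [b + 2 * u for b, u in zip(matvec(W, x), matvec(B, matvec(A, x)))]
print(matvec(merged, x), adapter_out)
```

Merging removes the extra matrix multiplications at inference time, at the cost of no longer being able to swap adapters on the same base weights.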

4.2 Define the generation function

Next we define a function that wraps an instruction (and optional input) in the same prompt format used for training, generates a continuation with the model, decodes each generated sequence with the tokenizer.decode() method, and splits the text on "### Response:" to keep only the model's answer.

Overall, the function generates a response from the language model for a given prompt and prints it:

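```python
import torch
from transformers import GenerationConfig

# num_beams=4 without do_sample=True means beam search; temperature and
# top_p only affect sampling, so they have no effect with these settings
generation_config = GenerationConfig(
    temperature=0.1,
    top_p=0.75,
    num_beams=4,
)


def generate_instruction_prompt(instruction, input_text=None):
    # Same template as in training, ending right after "### Response:"
    if input_text:
        return (
            "Below is an instruction that describes a task, paired with an input that provides "
            "further context. Write a response that appropriately completes the request.\n\n"
            f"### Instruction:\n{instruction}\n\n### Input:\n{input_text}\n\n### Response:"
        )
    return (
        "Below is an instruction that describes a task. "
        "Write a response that appropriately completes the request.\n\n"
        f"### Instruction:\n{instruction}\n\n### Response:"
    )


def evaluate(model, instruction, input_text=None):
    prompt = generate_instruction_prompt(instruction, input_text)
    inputs = tokenizer(prompt, return_tensors="pt")
    input_ids = inputs["input_ids"].cuda()
    with torch.no_grad():
        generation_output = model.generate(
            input_ids=input_ids,
            generation_config=generation_config,
            return_dict_in_generate=True,
            output_scores=True,
            max_new_tokens=256,
        )
    for s in generation_output.sequences:
        output = tokenizer.decode(s, skip_special_tokens=True)
        print("Response:", output.split("### Response:")[1].strip())
```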
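The evaluate function keeps everything after "### Response:", so if the model keeps generating and starts a new "### Instruction:" block, that text is printed too. A small post-processing helper, a hypothetical addition that is not part of the original code, can cut the answer at the next "###" marker. The sample string below is made up.

```python
def extract_response(decoded_text):
    """Return the text after '### Response:' and before any following '###' header."""
    if "### Response:" not in decoded_text:
        return decoded_text.strip()
    answer = decoded_text.split("### Response:", 1)[1]
    return answer.split("###", 1)[0].strip()


sample = ("### Instruction:\n¿Cuál es la capital de España?\n\n"
          "### Response:\nLa capital de España es Madrid.\n\n### Instruction:\nOtra")
print(extract_response(sample))  # La capital de España es Madrid.
```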

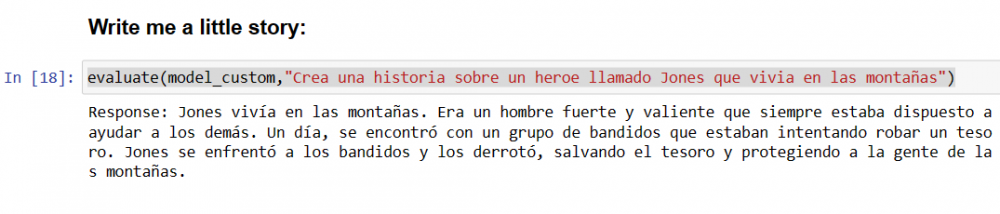

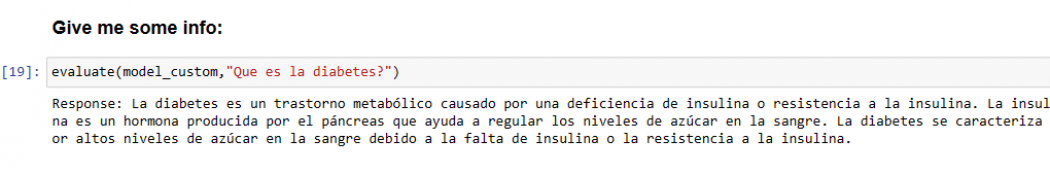

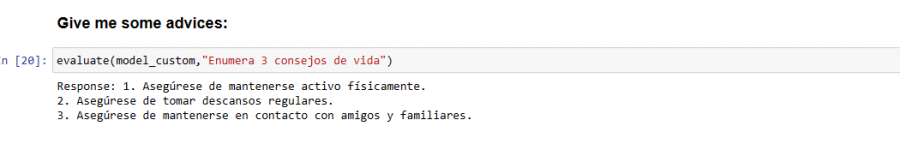

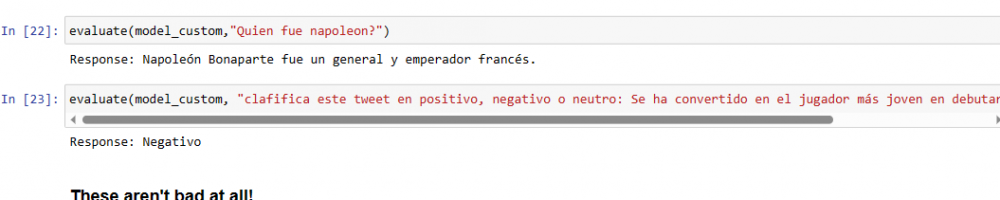

4.2 Time to generate text

Finally let’s check how our model perfoms with some prompts:

Final Words:

In summary, now that we know how to train a smaller model that follows instructions in the style of ChatGPT, it's your turn to get hands-on with your own data. Follow this notebook to train your own model in Spanish, English, or Italian, or to focus it on topics such as sports or politics.

Find all the code in my github repo.

If you liked this post, remember to follow me on Twitter.
